Have you ever thought about why we can not perform brain tasks on our computers? Well of course I don’t mean a simple cat/dog recognition, or calculus (Computers have been specifically designed to do a very limited number of brain functions extremely well – even better than humans), but something bigger like analyzing new and unfamiliar situations.

To answer this question, let’s first see how conventional computers work:

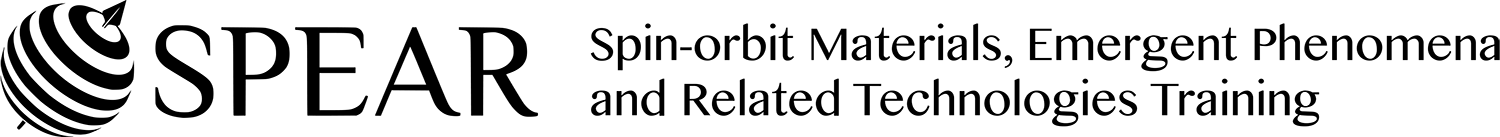

Simply explained, there are two main units: a processing unit to process and analyze the data and a memory unit to store them. These two blocks are separated from each other and every time that a task must be done, the data should go back and forth between these two units. This architecture is known as Von Neumann architecture [1].

As you’ve already found out, there are two issues with this architecture that makes it almost impossible to do heavy tasks with:

- Energy consumption, as the blocks are “separated” and lots of Joule heating can happen in between.

- Not fast enough, due to the time required for the data to go back and forth.

This is also known as the Von Neumann bottleneck [3]. In other words, the architecture causes a limitation on the throughput, and this intensive data exchange is a problem. To find an alternative for it, it’s best to take a look at our brain and try to build something to emulate it, because not only is it the fastest computer available, but it is super energy efficient.

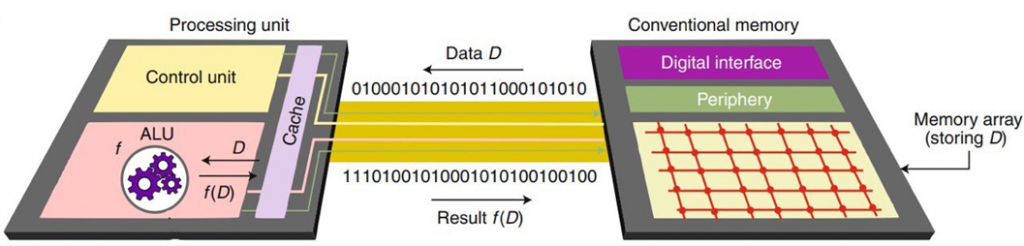

The brain is made up of a very dense network of interconnected neurons, which are responsible for all the data processing happening there. Each neuron has three parts: Soma (some call it neuron as well) which is the cell body and is responsible for the chemical processing of the neuron, Synapse which is like the memory unit and determines the strength of the connections to other neurons, and Axon which is like the wire connecting one neuron to the other.

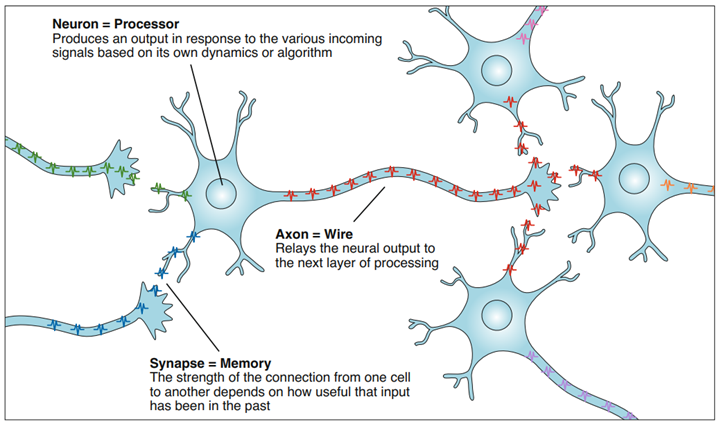

Neurons communicate with voltage signals (spikes) generated by the ions and the chemicals inside our brains. There have been many models presented on how they work, but here will be discussed the simplest (and probably the most useful) one: The leaky integrate and fire model [5].

As it was said earlier, neurons communicate with spikes which can change the potential of the soma. If a spike from a previous neuron arrives at a neuron after it, the potential of the soma increases. However, this is temporary, meaning that if no other spikes arrive afterward, the potential of the soma will reach the relaxed level again (leakage). On the other hand, if a train of spikes arrives at the neuron, they can accumulate (integrate) and if the potential reaches a threshold potential, the neuron itself will generate a spike (fire). After firing, the potential will again reach the relaxed level.

Apparently, the connections between all the neurons are not the same, and the differences are in the synapses. The form and combination of the synapses change in time depending on how active or inactive those two neurons were. The more they communicate, the stronger and better their connection, and this is called “synaptic plasticity”. (This is why learning something new is so hard because the connections between the neurons need time and practice to get better!). For more investigation into the fascinating world of the brain, this book is recommended: Neuronal Dynamics: From Single Neurons to Networks and Models of Cognition [7].

Now, it’s time to get back to the Von Neumann bottleneck. With inspiration from the brain, it can be seen that it’s better to place the memory unit in the vicinity, or even inside, the processing unit (just like the soma and the synapses which are really close), this way so much time and energy can be saved. It is also obvious that the processing units are better to be nonlinear as in the brain, and the memory unit should be able to be changed or manipulated to mimic the synaptic plasticity. We know how different parts should behave in order to have a computer to at least function like the brain, but the big question is: What hardwares should be used? What kind of devices act like a neuron, or a synapse? And even if we find them, are we able to place them close to each other to overcome the Von Neumann bottleneck?

These are the questions that Neuromorphic computing tries to answer. In other words, it is an attempt to build new hardware to be able to do computing like our brain. Some of the most promising candidates here are the spin-orbit devices as they are super-fast, energy-efficient, and more importantly, nonlinear [8][9]. I will talk about them and their major role in this field more in detail in the second part of my post soon!

Please don’t hesitate to ask questions: mahak@chalmers.se

References:

1. Von Neumann, J. Papers of John von Neumann on computers and computer theory. United States: N. p., 1986. Web.

2. Sebastian, A., Le Gallo, M., Khaddam-Aljameh, R. et al. Memory devices and applications for in-memory computing. Nat. Nanotechnol. 15, 529–544 (2020).

3. John Backus. 1978. Can programming be liberated from the von Neumann style? a functional style and its algebra of programs. Commun. ACM 21, 8 (Aug. 1978), 613–641.

4. Bains, S. The business of building brains. Nat Electron 3, 348–351 (2020).

5. Brunel, N., van Rossum, M.C.W. Lapicque’s 1907 paper: from frogs to integrate-and-fire. Biol Cybern 97, 337–339 (2007).

6. Kurenkov, A., DuttaGupta, S., Zhang, C., Fukami, S., Horio, Y., Ohno, H., Artificial Neuron and Synapse Realized in an Antiferromagnet/Ferromagnet Heterostructure Using Dynamics of Spin–Orbit Torque Switching. Adv. Mater. 2019, 31, 1900636.

7. Gerstner, W., Kistler, W., Naud, R., & Paninski, L. (2014). Neuronal Dynamics: From Single Neurons to Networks and Models of Cognition. Cambridge: Cambridge University Press.

8. Grollier, J., Querlioz, D., Camsari, K.Y. et al. Neuromorphic spintronics. Nat Electron 3, 360–370 (2020).

9. Zahedinejad, M., Fulara, H., Khymyn, R. et al. Memristive control of mutual spin Hall nano-oscillator synchronization for neuromorphic computing. Nat. Mater. 21, 81–87 (2022).